Faster Insights with AI: Building an AI Voice Assistant to Scale User Interviews

How we built Emily, our voice AI researcher, to automate user interviews and gather insights at scale — breaking free from traditional research bottlenecks.

AI has transformed how we prototype and conduct automated user testing. AI development tools like Lovable, Cursor, and Claude have made it possible to spin up working prototypes in hours rather than weeks. Product teams are iterating faster than ever before.

But there's a gap in this AI-powered acceleration: user research has been left behind, unless you want to spend $$$$.

While we're building faster, we're not learning faster. Teams are still stuck in the same research bottlenecks that existed five years ago.

The User Research Bottleneck in Modern Product Development

Most product decisions don’t have direct user research behind them. That's not because teams don't value user insights, but because traditional research methods create bottlenecks that can't keep pace with modern product development.

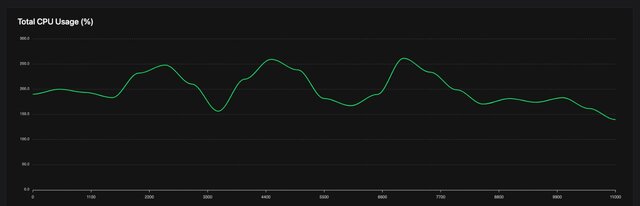

We experienced this firsthand with our WhatLingo app. We had a tricky WhatsApp linking flow that our analytics showed was failing: users were dropping off at a few specific steps.

The data told us what was happening, but not why. We needed to watch users struggle through the flow and hear their frustrations in real time. But doing user outreach, scheduling interviews, coordinating calendars, and setting up screen sharing for what should have been a 10-minute conversation? There had to be a better way.

Voice AI research is available any time, anywhere

Why We Chose Voice AI for User Research

So we decided to test voice AI research to see whether it could address some of the core blockers that make teams put off user research:

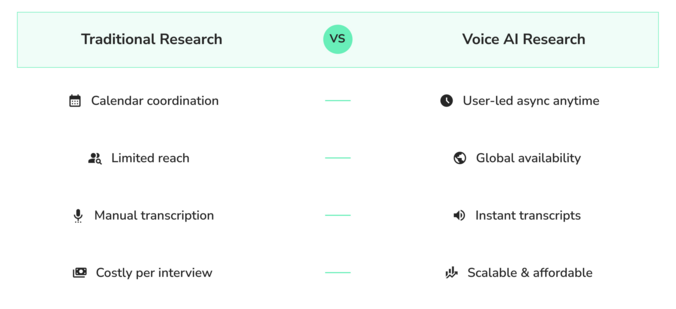

- User schedule and geographic limitations: Users can engage when it's convenient for them, not when slots are available.

- Calendar scheduling bottlenecks: One researcher can only conduct so many interviews a day while keeping the quality high.

- Analysis delays: We can analyze each interview as it comes in and then use AI to find patterns, rather than doing it all in one block as with traditional interviews.

- Cost barriers: Each traditional interview costs time, money, and coordination overhead.

We believe that automating as much of the ‘admin’ side of user research as possible will let us focus on the part that genuinely needs a human: reviewing the transcripts and creating the analysis.
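To make "analyze each interview as it comes in" concrete, here is a minimal Python sketch of a running analysis that tags each transcript with themes on arrival and keeps a tally across interviews. It is an illustration under stated assumptions, not a description of our actual tooling: the theme names and trigger phrases are hypothetical, and simple keyword matching stands in for what would more realistically be an LLM or a human reviewer tagging each transcript.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Set, Tuple

# Hypothetical themes and trigger phrases, for illustration only.
# In practice an LLM or a human reviewer would tag themes per transcript.
THEME_KEYWORDS = {
    "setup_confusion": ["confus", "unclear", "didn't understand"],
    "privacy_concern": ["privacy", "trust", "my data"],
    "time_pressure": ["busy", "no time", "later"],
}


@dataclass
class RunningAnalysis:
    """Accumulates theme counts as each interview transcript arrives."""

    interviews: int = 0
    theme_counts: Counter = field(default_factory=Counter)

    def add_transcript(self, transcript: str) -> Set[str]:
        text = transcript.lower()
        # A theme counts once per interview, however often it is mentioned.
        themes = {
            theme
            for theme, phrases in THEME_KEYWORDS.items()
            if any(phrase in text for phrase in phrases)
        }
        self.interviews += 1
        self.theme_counts.update(themes)
        return themes

    def top_themes(self, n: int = 3) -> List[Tuple[str, float]]:
        # Share of interviews so far in which each theme appeared.
        if self.interviews == 0:
            return []
        return [
            (theme, count / self.interviews)
            for theme, count in self.theme_counts.most_common(n)
        ]


analysis = RunningAnalysis()
analysis.add_transcript("I was confused by the linking step.")
analysis.add_transcript("I was busy, and honestly I worry about my privacy.")
print(analysis.top_themes())
```

Because the tally updates per interview, patterns start to show after the first few conversations instead of waiting for a batch to finish; the human work shifts to checking whether the emerging themes hold up when you read the transcripts.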

The Reality of AI-Powered User Interviews

The AI dynamic can change how people respond. Some users might actually be more honest with an AI researcher: there are no feelings to spare and no judgement to navigate. It's not without its flaws, though. Some people feel uncomfortable being recorded, while others find AI interactions strange or impersonal.

This isn't a universal solution; it's an additional tool that works for some users and some research contexts.

Our voice AI researcher, Emily, isn't perfect. We’ve noticed she sometimes over-clarifies responses and can feel repetitive. There were technical hiccups and moments that felt distinctly artificial.

But here's what matters: Emily is available whenever a user wants to give us feedback. Even an imperfect AI conversation can generate insights that written forms never would have captured (because the users probably wouldn’t fill them out).

This reflects a fundamental truth about AI development: it's probabilistic, not deterministic. Emily gets better through real conversations with real users, and we learn from each interaction too.

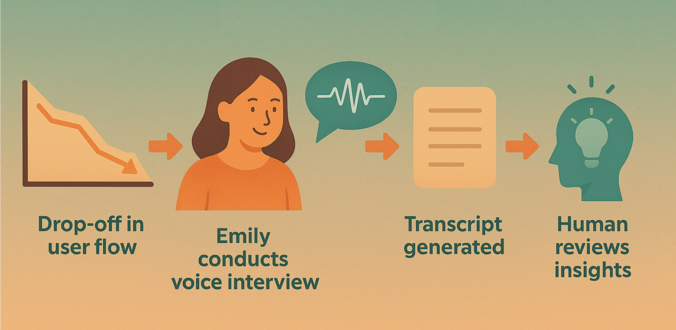

The Operational Breakthrough: Scaling User Research

The real breakthrough is just as operational as it is technical. Voice AI research and conversational AI platforms allow product teams to automate the logistics of user interviews while preserving the insights that only conversation can provide.

Imagine conducting user interviews at scale, gathering insights without the usual scheduling constraints. The compound effect on product decisions becomes enormous when user insights flow as freely as prototypes. You can combine this with your own strategic knowledge, data, and internal research to make better decisions.

The companies winning in today's market aren't those with perfect research processes, or those waiting for the AI to do everything. They're those with consistent research habits. Ideally, we could remove enough friction that gathering user insights becomes as routine as checking analytics.

It's about making “some research” possible when the alternative is “no research at all.”

The question isn't whether AI can conduct research as well as humans: it’s whether you can afford to keep making decisions without doing user research.

Emily automates the logistics of user interviews

Building Our Voice AI Researcher with ElevenLabs

We created Emily using ElevenLabs' conversational AI platform after evaluating several options.

The reasons we chose ElevenLabs:

- Rapid prototyping: Impressive results in 10 minutes, working version in hours

- No-code approach: Our product team could build and iterate without engineering resources

- Strong documentation: Clear guidance on prompt optimization and AI improvement

For setup, we fed ElevenLabs' documentation into Claude alongside our research requirements to generate Emily's initial prompt. Within hours, we had a functional AI researcher.

But the first iteration felt awkward to talk to.

The issue was conversation flow — user researchers need to start and lead conversations naturally, not just respond to prompts. Most of our work ended up being testing and tweaking the prompt to get this right.

The key was adding natural chit-chat and small talk at the beginning. Without this, Emily felt cold and robotic, diving straight into questions rather than building rapport like a human researcher would.

Emily's interface: ready to collect user feedback 24/7

Creating an AI Researcher That Feels Human

Rather than just building a generic chatbot, we carefully crafted Emily's personality and methodology based on proven user research principles. Here's how we approached the key design decisions:

Creating an Authentic Research Persona

We designed Emily as a warm, empathetic researcher with a soft Scottish accent — approachable and trustworthy.

She uses natural conversational fillers and pauses, making interactions feel genuine rather than robotic. The goal was someone users would feel comfortable opening up to about their real experiences.

Structured Interview Flow

Emily follows a research methodology grounded in "The Mom Test" and the "5 whys" technique. Her conversations follow a clear structure:

- Natural chit-chat to build rapport

- Clear consent and expectations setting

- Deep exploration of user motivations and pain points (not just feature feedback)

- Thoughtful summarization and next steps
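The four-stage flow above can be written down explicitly. The sketch below assumes Emily's behavior is driven by a single text prompt, as in our no-code ElevenLabs setup, and shows one hypothetical way to generate that prompt from an ordered list of stages. The stage wording paraphrases this section; `Stage`, `PERSONA`, `build_system_prompt`, and `next_stage` are illustrative names and are not part of any ElevenLabs API.

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    """Interview stages, in the order Emily should move through them."""

    RAPPORT = "Open with natural chit-chat to build rapport before any research questions."
    CONSENT = "Explain the purpose and length of the call and ask for consent to record."
    EXPLORATION = (
        "Explore the user's motivations and pain points in their daily life. "
        "Ask about specific past experiences, not opinions on hypothetical features."
    )
    SUMMARY = "Summarize what you heard, check it with the user, and explain next steps."


# Persona text paraphrased from this article; the exact wording is an assumption.
PERSONA = (
    "You are Emily, a warm, empathetic user researcher with a soft Scottish accent. "
    "Use natural conversational fillers and pauses."
)


def build_system_prompt(persona: str = PERSONA) -> str:
    """Assemble a plain-text system prompt: persona first, then numbered stages."""
    lines = [persona, "", "Move through these stages in order:"]
    for number, stage in enumerate(Stage, start=1):
        lines.append(f"{number}. {stage.name.title()}: {stage.value}")
    return "\n".join(lines)


def next_stage(current: Stage) -> Optional[Stage]:
    """Return the stage after `current`, or None once the summary is done."""
    stages = list(Stage)
    index = stages.index(current)
    return stages[index + 1] if index + 1 < len(stages) else None


print(build_system_prompt())
```

Keeping the stages in one ordered structure makes it easy to move the rapport stage to the front (the fix that made Emily feel less robotic) or tweak a single stage's wording without touching the rest of the prompt.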

Adaptive Questioning

Rather than rigid scripts, Emily adapts her vocabulary to match users' technical expertise and follows interesting threads when users mention specific challenges. She focuses on understanding the problems users face in their daily lives, not validating product features.
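In our setup, Emily's adaptive behavior is shaped by her prompt rather than hand-written rules, but writing the follow-up policy as code can help pin down what "follow interesting threads" should mean, for example when reviewing transcripts for missed or excessive probes. Below is a minimal, hypothetical sketch of a "5 whys" follow-up rule: keep asking why while the user is describing a challenge, stop after five follow-ups, and word the probe to match their expertise. The pain-signal phrases, probe wording, and function name are assumptions for illustration.

```python
from typing import Optional

MAX_WHY_DEPTH = 5  # the "5 whys" ceiling: stop probing after five follow-ups

# Hypothetical phrases suggesting the user is describing a specific challenge.
PAIN_SIGNALS = ("frustrat", "annoying", "stuck", "gave up", "confus", "hard to")

# Hypothetical probes, worded for different levels of technical expertise.
PROBES = {
    "novice": "What made that tricky for you?",
    "expert": "What was the underlying blocker there?",
}


def next_follow_up(answer: str, depth: int, expertise: str = "novice") -> Optional[str]:
    """Return a 'why' follow-up question, or None to move on to the next topic.

    depth is how many follow-ups have already been asked on this thread.
    """
    if depth >= MAX_WHY_DEPTH:
        return None  # five whys reached: summarize and move on
    if not any(signal in answer.lower() for signal in PAIN_SIGNALS):
        return None  # no challenge mentioned: don't over-probe
    # Fall back to plain-language wording if expertise is unknown.
    return PROBES.get(expertise, PROBES["novice"])


print(next_follow_up("I got stuck on the second screen", depth=0))
# What made that tricky for you?
```

The depth cap and the "no challenge, no probe" check are the two levers that guard against the repetitive over-clarifying described earlier.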

Implementing Voice AI Research in Your Product Team

User research automation isn't about replacing human researchers — it's about removing the friction that prevents research from happening at all. When scheduling a 10-minute user interview requires hours of coordination, most teams simply skip the research.

Our AI voice researcher Emily represents a different approach: making user insights as accessible as checking your analytics dashboard. The compound effect of this accessibility could fundamentally change how product decisions get made.

The question isn't whether AI can conduct perfect research. It's whether you can afford to keep building without any user research at all.