When ChatGPT Meets Business Logic: An Experiment in AI-Powered Validation

In our quest to explore AI's role in software development, we challenged ChatGPT with a sticky bit of business logic validation. The results were expectedly unexpected. Read on to learn more.

I was working on a feature for one of our client partners: a custom experience for power users that limits receipt submissions to a maximum of 25 receipts in 7 days. At a high level, our end goal was to achieve the following:

- Give the users additional information on why their activity was restricted;

- Let them know when they would be able to use the feature again;

- Show them their new quota for when the feature gets unrestricted.
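To make those three goals concrete, here is a minimal Python sketch of one possible reading of them. It is an illustration only, not the production implementation: the names `quota_status`, `QuotaStatus`, `LIMIT` and `WINDOW` are hypothetical, and it assumes a rolling window in which a receipt stops counting exactly 7 days after it was submitted.

```python
from datetime import datetime, timedelta
from typing import List, NamedTuple, Optional

LIMIT = 25                  # assumed: max receipts per window
WINDOW = timedelta(days=7)  # assumed: rolling 7-day window


class QuotaStatus(NamedTuple):
    remaining: int                  # receipts the user can still submit now
    unlock_at: Optional[datetime]   # when a restricted user can submit again
    quota_at_unlock: Optional[int]  # how many receipts open up at that moment


def quota_status(submissions: List[datetime], now: datetime) -> QuotaStatus:
    # Only receipts submitted within the last 7 days count against the limit.
    active = sorted(t for t in submissions if now - WINDOW < t <= now)
    remaining = max(LIMIT - len(active), 0)
    if remaining > 0:
        return QuotaStatus(remaining, None, None)

    # Restricted: the feature unlocks once enough of the oldest receipts
    # age out to bring the count back under the limit.
    unlock_at = active[len(active) - LIMIT] + WINDOW
    # Receipts that will still be inside the window at the unlock time.
    still_counted = sum(1 for t in active if t + WINDOW > unlock_at)
    return QuotaStatus(0, unlock_at, LIMIT - still_counted)


# Hypothetical data: 10 receipts on day 0, 15 more on day 2, checked on day 4.
start = datetime(2024, 1, 1, 12, 0)
history = [start] * 10 + [start + timedelta(days=2)] * 15
print(quota_status(history, start + timedelta(days=4)))
```

In this made-up scenario the user is restricted until the first 10 receipts age out on day 7, at which point 10 slots open up again, because the 15 receipts from day 2 still count.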

Seems pretty straightforward, right?! And indeed it was. I coded it, and it went through code review just fine. But when we started testing it, our QA person had a different view on how some of the calculations should've been performed. Together with the Product Manager, we discussed the details in the acceptance criteria (AC), and then we decided to bring ChatGPT into the conversation to see if AI would add value to our discussion.

Here's how that conversation went and what we can learn from it:

Seeking a 3rd opinion

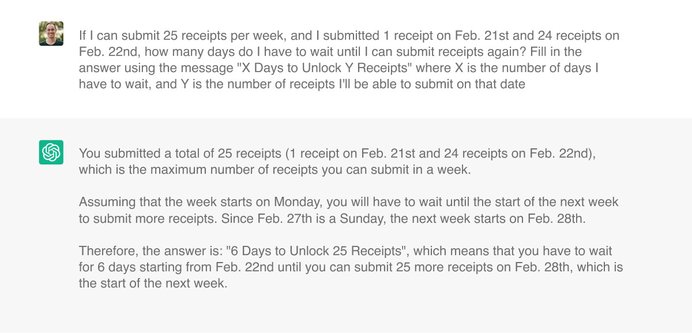

I started out by giving ChatGPT what I thought was a pretty clear context of what the problem was and what I wanted it to do for me. Here's my initial prompt and the output it gave me:

Interesting response, isn't it?

It did sum up my receipts correctly, assumed a reasonable day of the week to start counting from, and even replaced the characters on the output message with the right values!

Well, have you ever had one of those math tests where you apply all of the formulas and you think you did everything right, but then at the end of the question you have to select one of the predefined answers, and the options range from 5 to 30 while the result of your calculations was 3006?

That's basically what happened here, and that's a problem!

You see, it really looks like ChatGPT understood the context I gave it, and it also explained how it came up with this solution in a really confident and eloquent way.

So unless I already knew what the correct answer was, there's no way for me to tell that this one is wrong, since the AI doesn't indicate its "confidence level" to me.

That may not sound like a big deal, and in this context it might not be. But imagine that happening when you ask Microsoft 365 to summarize a spreadsheet with a company's revenue, or when you ask Google Meet for the main talking points of a presentation and it simply misses some of them because the AI model misinterpreted the context!

My point here is that you can't trust ChatGPT blindly and take whatever response it gives you as "the truth".

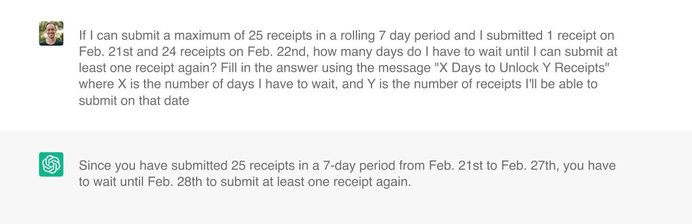

Anyway, I tried again, of course, but this time I changed the mention of "25 receipts per week" to "25 receipts in a 7 day period", and still the output was basically the same.

Then in my next attempt, I tweaked the prompt to indicate that I wanted it to continue counting the receipts by saying "If I can submit 25 receipts in a 7 day period and the number of receipts submitted are cumulative", and then this was the output:

I changed the prompt once again to: "If I can submit 25 receipts in 7 days period and receipts submitted are cumulative to the next 7 day period" but then its response was that "You have 6 Days to Unlock 27 Receipts again, starting on Feb. 28th". So now it's saying that if I wait 6 days I can submit 27 receipts, even though my prompt clearly states the maximum amount is 25. 🫠

We went back and forth for a while, and ChatGPT was really convinced that 27 was the right number of receipts to submit next, until I changed my prompt to include "considering that the number of receipts already submitted is still a part of the counting for a different week", and its response was "6 Days to Unlock 23 Receipts", which is still wrong, but at least a bit closer to what I was looking for.

After a few more attempts, I finally got it to fully understand what I wanted by using this prompt:

That was spot on!

But 30+ prompts later, the only difference between the prompt that got it right and the first one I used - which kicked the ball out of the stadium - was the addition of "in a rolling 7-day period" in the introduction and "at least one" in the receipt count.
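Why can a single word like "rolling" matter so much? Here is a small Python sketch of two ways "25 receipts in 7 days" can be read. This is only an assumption about the kind of ambiguity involved, not a reconstruction of what ChatGPT actually did; the function names and the sample dates are made up for illustration.

```python
from datetime import date, timedelta
from typing import List


def count_calendar_week(days: List[date], today: date) -> int:
    # Fixed-week reading: the counter resets every Monday.
    week_start = today - timedelta(days=today.weekday())
    return sum(1 for d in days if week_start <= d <= today)


def count_rolling(days: List[date], today: date, window_days: int = 7) -> int:
    # Rolling reading: always look back over the last 7 days, today included.
    oldest = today - timedelta(days=window_days - 1)
    return sum(1 for d in days if oldest <= d <= today)


# Hypothetical history: 20 receipts on a Sunday, 5 more on the following Monday.
history = [date(2024, 1, 7)] * 20 + [date(2024, 1, 8)] * 5
monday = date(2024, 1, 8)

print(count_calendar_week(history, monday))  # 5: Sunday's receipts are "last week"
print(count_rolling(history, monday))        # 25: the user is already at the limit
```

Same receipts, same day, and two very different answers, depending on a reading that the first prompt never pinned down.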

See why being an 'AI Prompt Engineer' is now a thing?

Tips/Tricks

We learned a lot about integrating ChatGPT into our workflows by running simple experiments like this, and these are some of the things we believe you should take into account when doing the same for your organization:

You need to know what you're looking for and be able to spot when AI stumbles

It's very rare for ChatGPT to say "I don't know the answer to that", and for most prompts, it'll most likely say something back, but it doesn't necessarily mean that its response is actually correct.

That's why I said at the beginning that you kinda need to know what you're looking for, otherwise, you might be misled by an output that doesn't reflect the reality of the facts: you've probably seen academics complaining on Twitter that ChatGPT is generating fake citations to articles that don't exist.

Therefore, before you start writing your prompt, you either need to know the final answer OR have an easy way to verify if the AI's output is correct or not.
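An "easy way to verify" doesn't have to be fancy. As a rough sketch, the snippet below checks an answer against the rules we already know before anyone looks at the math. The helper name `sanity_check`, the message pattern, and the bounds are assumptions made for this example, based on the "N Days to Unlock M Receipts" messages quoted earlier.

```python
import re
from typing import List

LIMIT = 25       # assumed: max receipts per window
WINDOW_DAYS = 7  # assumed: length of the window in days

# Matches messages shaped like "6 Days to Unlock 23 Receipts".
PATTERN = re.compile(
    r"(\d+)\s+Days?\s+to\s+Unlock\s+(\d+)\s+Receipts?", re.IGNORECASE
)


def sanity_check(message: str) -> List[str]:
    """Return a list of rule violations found in an AI-generated message."""
    match = PATTERN.search(message)
    if match is None:
        return ["message does not match the expected format"]

    days, receipts = int(match.group(1)), int(match.group(2))
    problems = []
    if not 1 <= days <= WINDOW_DAYS:
        problems.append(f"{days} days is outside 1..{WINDOW_DAYS}")
    if not 1 <= receipts <= LIMIT:
        problems.append(f"{receipts} receipts is outside 1..{LIMIT}")
    return problems


print(sanity_check("You have 6 Days to Unlock 27 Receipts again, starting on Feb. 28th"))
print(sanity_check("6 Days to Unlock 23 Receipts"))
```

A check like this flags the impossible "27 Receipts" answer right away, but it happily accepts "23 Receipts", which was also wrong. It filters out nonsense; it doesn't replace knowing the right answer.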

Your prompt is everything (but you won't know it until you find it)

This may sound obvious since that's the only way you can interact with ChatGPT, but creating a prompt is a lot harder than it seems.

First off, you have to realize that we aren't as clear as we think we are when we're explaining things. On top of that, words that seem like synonyms to us are not always interpreted that way by the AI, which sometimes makes it quite hard to explain exactly what you want it to do.

Another annoying thing we came across is that, if you have a prompt that gives ChatGPT multiple scenarios (e.g., if you encounter X do Y, otherwise do Z), it can sometimes mix them up and combine all of the cases into a single answer.

So beware of that when you're creating your prompts.

The best thing to do is to avoid overthinking it and trying to create the perfect prompt on the first try. Just ask the AI for something basic in the context of your problem to see what it outputs, and build up from there, filling in the gaps with your next question.

If you're looking for some inspiration on how to create your prompt, websites like Awesome ChatGPT Prompts, FlowGPT and Prompt Engineering Daily can help boost your creativity and find the right words to interact with ChatGPT.

A lot of times you're better off fixing things yourself

In many of our experiments with ChatGPT and other similar tools, especially as we've used them for more advanced use cases like data analysis and code generation, we're seeing that even though they can get 70-80% of things right, getting the last 20-30% right can be more trouble than it's worth.

ChatGPT, for example, does have the ability to correct itself if you give it more context on what's wrong with the answer it gave you.

But most of the time it's easier (and faster) to fix things on your own than to try to explain what it did wrong (again, that's why you need to know exactly what you're looking for).

That's why internally we use ChatGPT as a "starting point", rather than a "source of truth".

Final Thoughts

At Whitespectre, we continue to test and integrate new AI tools into our processes, be it in brainstorming, code generation, data analysis, validation, or many other areas. It's really impressive to see how much these tools can do in various contexts, especially when you consider that the more we interact with these LLMs, the better they'll become. What we see today is only the start.

It’s also clear to us that in general, AI is an extension of a person’s abilities and talents. It can massively increase your productivity, but only if you have the skills to sharpen inputs, critically assess the outputs, and know when AI is taking you off course.

We're very excited about AI and how it'll help us deliver the next generation of high-quality and delightful solutions to our client partners. We'll definitely share more of our insights with you soon! Stay tuned.